Back in the 1900s when I was in 4th grade, the teacher gave us an exercise: Successfully get a robot to sharpen a pencil.

Now, this was public school in rural Wisconsin; we didn’t have robots. What we did have was other 4th graders. So, once you handwrote your instructions and handed them to the teacher, a fellow student randomly selected a set of instructions and executed them.

Perfectly to the letter.

To inevitable failure and laughter from the entire class.

For even at 9 years old, we make assumptions about the important parts of a task, and we leave out substantial context, either because we don’t appreciate it or because we take it for granted.

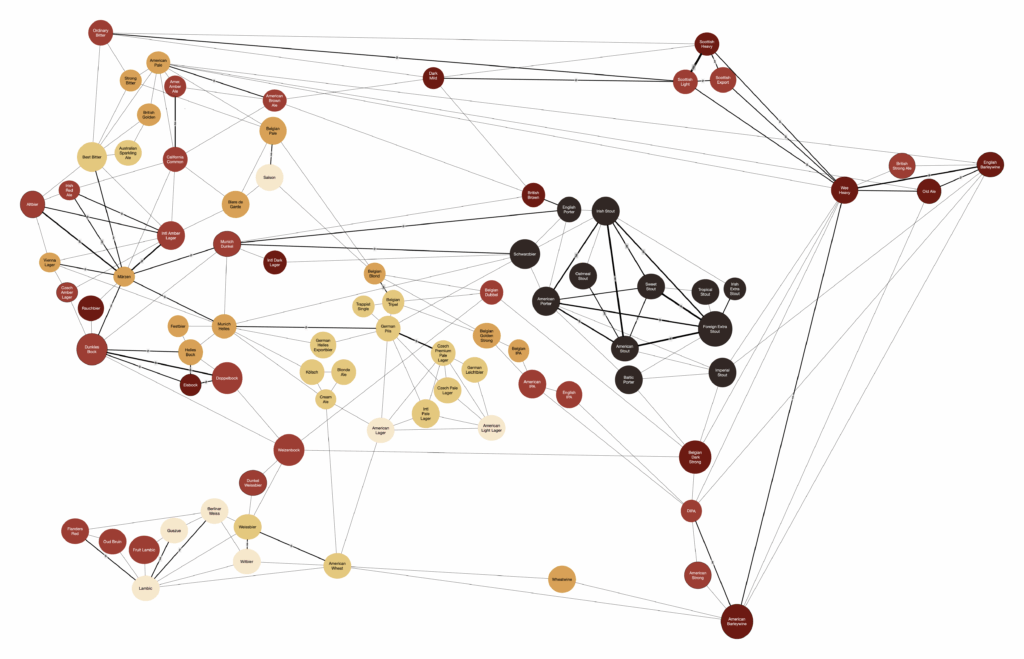

For the past 4 years, I’ve been managing DÉJÀ BRÜ via WordPress, WooCommerce, a Trello board, and a series of Google Sheets. It’s a small competition, so it works well enough. But this year, the fragmentation got to me, so two days after the competition was over I opened up Claude and started vibe-coding a homebrew competition management app.

Since Claude Code starts at $20/month, I began with just the chatbot and had it generate a shell script to generate the app. About halfway through the week, I paid the $20, and that sped things up considerably.

But let’s set the full context.

Between 2000 and 2010, I taught myself PHP, MySQL, Ruby, Rails, and JavaScript. I had a shelf of books covering all 5. If you dig back into this blog, you’ll find loads of posts from that era.

However, in the intervening years, I’ve done little programming, either for myself or for others. Turns out, even maintaining a development environment takes deliberate effort. I grew tired of chasing the latest hotness (e.g. Node.js). I grew weary of managing server uptime and fixing bugs. So I stopped.

Then, in fall of 2021, I got an idea for an app to help me organize my genealogy research, so I dusted off my Rails skills and started building. When I completed the research project, I turned the app off and haven’t visited it since.

So, it’s been 5 years since I’ve used any software I’ve written and at least a decade since anyone else has.

Seems like it might be time.

As of this writing, I’ve spent $20 and Claude Code has helped me with 3 apps.

- A homebrew competition management app (~12hrs, from scratch, currently deployed on a test server)

- A homebrew recipe management app (~6hrs; from scratch, currently migrating my recipes into it, still local)

- The aforementioned genealogy research app (~3hrs; updating to Rails 8, and adding mapping, still local)

And that’s just been in the last week.

I’m absolutely left with the sense that vibe-coding is a misnomer. It’s more like technical writing, more like clearly articulating to a 4th grader how to sharpen a pencil.

Here’s my initial prompt for the competition management app:

create a ruby on rails app for beer judging.

2-3 judges per flight of 5-6 entries. homebrew competition, bjcp-sanctioned, but arbitrary styles could be select, from full BJCP to just a single historic style not recognized by the BJCP. actors: judges, entrants, stewards, organizer roles; cellar, judge coordinator, event coordinator, admin. entrants submit their own entries, some competitions may be paid, others may be free entrants get downloadable scoresheet PDF, publicly available leaderboard. devise for auth, unless Google OAuth would be easier. payment via Paypal. full rails with views. probably Heroku for deployment. the minimum I'd like; entrants enter beers, judges can register, organizer and assign beers to categories, organizer + judge coordinator can assign judges to flights while avoiding any potential entry conflicts, judges can evaluate a beer, entrants can see evaluation within their account.

As you can see, I had some requirements.
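One requirement in that prompt, assigning judges to flights while avoiding entry conflicts, is a good example of how much a single clause can hide. Here is a minimal sketch of it in plain Ruby. This is not the code Claude generated. The names (`Judge`, `Entry`, `Flight`, `conflict?`, `assign_judges`) are hypothetical, and it assumes the only conflict that matters is a judge evaluating their own entry.

```ruby
# Hypothetical data shapes for illustration; not taken from the generated app.
Judge  = Struct.new(:name, :entrant_id)   # entrant_id is nil if the judge entered nothing
Entry  = Struct.new(:id, :entrant_id)
Flight = Struct.new(:name, :entries, :judges)

# Assumption: a judge conflicts with a flight only if it contains their own entry.
def conflict?(judge, flight)
  return false if judge.entrant_id.nil?

  flight.entries.any? { |entry| entry.entrant_id == judge.entrant_id }
end

# Greedy assignment: each flight takes the first `per_flight` judges who are
# still unassigned and have no conflict with that flight.
def assign_judges(flights, judges, per_flight: 2)
  available = judges.dup
  flights.each do |flight|
    picked = available.reject { |judge| conflict?(judge, flight) }.first(per_flight)
    raise "Not enough conflict-free judges for #{flight.name}" if picked.size < per_flight

    flight.judges = picked
    available -= picked
  end
  flights
end

judges = [
  Judge.new("Ana", 1), Judge.new("Ben", nil),
  Judge.new("Cy", 2),  Judge.new("Dee", nil)
]
flights = [
  Flight.new("Flight A", [Entry.new(10, 1), Entry.new(11, 3)], []),
  Flight.new("Flight B", [Entry.new(12, 2)], [])
]

assign_judges(flights, judges).each do |flight|
  puts "#{flight.name}: #{flight.judges.map(&:name).join(', ')}"
end
# Flight A: Ben, Cy
# Flight B: Ana, Dee
```

A greedy pass like this can fail even when a valid assignment exists, because an early flight may use up the only judges a later flight could accept. It also ignores things a real competition cares about, such as judge rank or one judge sitting multiple flights. Those are exactly the details a prompt has to spell out.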

Now, there have been a few pleasant surprises at what Claude implements outside of my request (feature or bug?), and those suggestions usually force me to really consider what I want and how I want it to work. I find Claude’s code is readable and I’m comfortable making edits to it.

I tried Cursor for one of these vibe-coding sessions and found that while the code was somewhat higher quality (more readable, more concise), Cursor was a far worse business analyst: it asked fewer clarifying questions and stubbed out worse features unprompted.

By the time Weekend Update started on Saturday night, I had deployed the homebrew competition management app to a test server and shared a link with my co-organizers. However, that in itself took 3 hrs – which is well within my past estimates of deployment taking 50% of the development time to date.

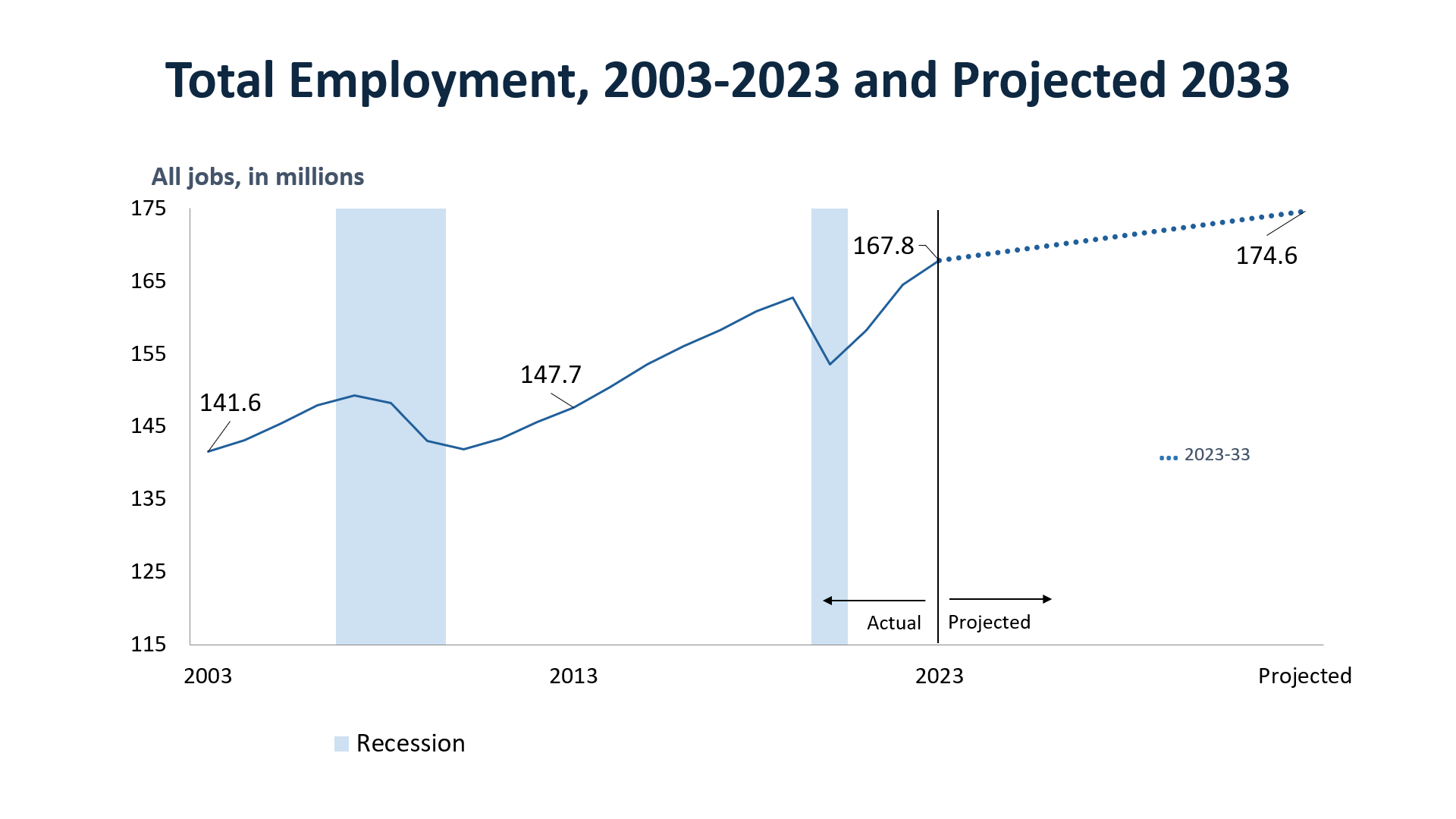

In the end, the primary reason I hadn’t committed to building any of this software before was opportunity cost.

Why build custom software if Google Sheets works?

Why build custom software if stable, maintained equivalents exist for $30/year?

I’m not sure that having an expert Rails developer at my fingertips for $20/month substantially changes that. If anything, all this highlights is that writing the software was never the hard part – having an incentive to maintain it is.

And none of these 3 apps has that yet.

To this point, when I asked Claude to:

help me create a shell script to generate a ruby on rails app for managing homebrew beer, mead, and cider recipes

Its initial response was:

Before diving into script generation, a few probing questions to make sure this is actually worth building right:

- What's the actual gap? Brewfather, Beersmith, and Brewtarget already exist. What do they not do for you?

- What's the scope? "Managing recipes" is vague. Are you tracking ingredients, fermentation logs, batch history, tasting notes, calculated ABV/IBU/SRM, scaling, something else?

- Who's using this? Just you locally, or are you thinking multi-user, hosted, shared recipes?

- Why Rails specifically? For a personal recipe manager, Rails might be overkill. Have you considered the tradeoff vs. a simpler tool?

Even Claude itself is aware of opportunity cost and was comfortable discouraging me right from the start. Not a bad thing to start with, “hey, have you actually tried the existing options?”

I have, and are my preferences worth the trade-offs? Unlikely. But here we go!

And now I’ve hit my rate limits without even knowing what they are, and it looks like they won’t reset for 4-5 days. Jeebus. These tools are highly unstable. More and more like the toy giraffe I evoked a year ago, the more you engage with them, the worse they are.

After being rate-limited on Claude yesterday, I did the next obvious thing: I loaded up GitHub Copilot and paid $10 to keep going. Quality seems just as good – if not slightly better, as Copilot actually cares about specs and tests.